Fake Twitter accounts politicized discourse about vaccines

Activity from phony Twitter accounts established by the Russian Internet Research Agency (IRA) between 2015 and 2017 may have contributed to politicizing Americans’ position on the nature and efficacy of vaccines, a health care topic that has not historically fallen along party lines, according to new research published in the American Journal of Public Health.

The findings, based on machine learning analysis of nearly 3 million tweets from fake accounts, expose a general threat made startlingly more relevant in the face of the pandemic caused by the novel coronavirus, according to Yotam Ophir, an assistant professor of communication in the University at Buffalo College of Arts and Sciences, who co-authored the study.

“There is a real danger of health topics being politicized and used as propaganda tools. If that happens for topics such as coronavirus, people would be inclined to evaluate the importance and veracity of health messages—from either health experts, politicians, or trusted media outlets—based on how it reflects their political leanings,” says Ophir, an expert in computational modeling, media effects and persuasion.

“If people perceive health topics as being aligned with a political agenda, whether it’s left or right, then they will consequently begin to lose trust in health organizations and question their objectivity.”

To understand why this might be only the beginning of more intense polarization, consider that the threat posed by polarizing health care topics may be an unintended side effect of Russian attempts to influence other political discussions, including topics tied closely to the 2016 U.S. presidential election.

“I don’t believe the Russians wanted to sow discord around vaccines specifically, but rather chose to harness social tensions around vaccines in order to make the Republican characters they created appear more Republican and the Democratic characters they created to appear more Democratic. This intensifies a recently emerging divide where one previously did not exist.”

The Russians’ intentions in this particular case, however, don’t matter when considering the implications for public health, according to Ophir. What is pertinent is that the IRA used a public health topic to serve its own strategic and political needs, targeting Republicans and Democrats with different messages. If that proves effective, the Russians will ramp up their misinformation campaign, moving from what might be an unplanned outcome to a more persistent and focused effort.

“In recent years, we see the change already with Republicans starting to lose trust in vaccines while Democrats seem unmoved,” Ophir says. “Again, I don’t think the Russians care about vaccines, but along the way they created and intensified this emerging divide.

“Now they can target each party with different messages, spreading misinformation unequally, targeting susceptible groups with lower trust in government and science.”

Ophir’s paper with Dror Walter, an assistant professor of communication at Georgia State University, and Kathleen Hall Jamieson, director of the Annenberg Public Policy Center at the University of Pennsylvania, began as a conversation at a 2018 conference, after it was first discovered that Twitter troll accounts were discussing non-political topics such as vaccines.

At around the same time, Jamieson published “Cyber-War,” a book about Russian interference in the 2016 presidential election that identified thematic personas among Twitter trolls. These personas are designated topical and linguistic roles played by each fake account.

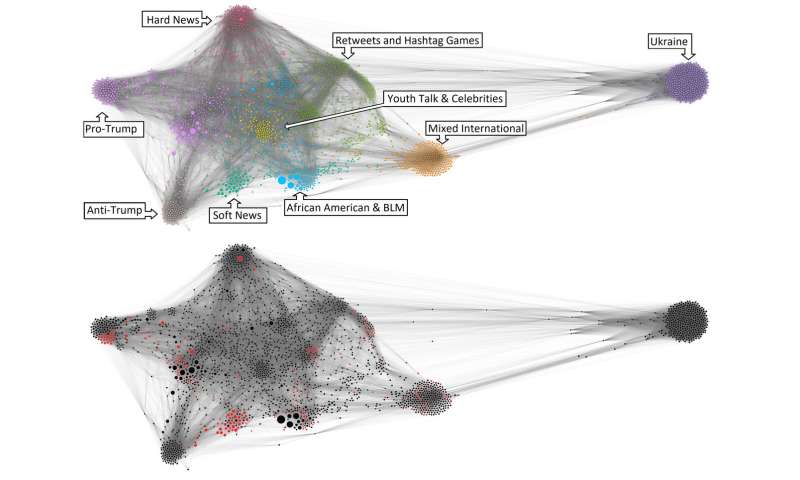

Inspired by Jamieson’s work, previous research and Ophir’s focus on connecting health misinformation and politics, the team used computational methods to identify nine personas among nearly 2,700 accounts.

The pro-Trump personas were more likely to express anti-vaccine sentiment, while anti-Trump personas expressed support for vaccines. Accounts falling under the persona type mimicking African Americans and Black Lives Matter activists also expressed more anti-vaccine messages.

The researchers used their own method, the Analysis of Topic Model Networks, to identify patterns among the nearly 3 million tweets. The method pairs topic modeling with network analysis that treats each topic as a node in a semantic network.

This form of unsupervised machine learning finds associations and clusters that would be impractical for human readers to detect across millions of tweets.
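The idea of treating topics as nodes in a network can be illustrated with a small sketch. It is not the researchers' implementation: the topic names, weights and the 0.5 threshold are hypothetical, and simple connected components stand in for whatever community detection the actual method uses. The sketch assumes a topic model has already produced a weight for each topic in each document, links two topics when they tend to appear in the same documents, and then groups linked topics into clusters.

```python
from itertools import combinations
from math import sqrt

# Hypothetical toy data: each list holds one topic's weight in six documents.
# In practice these weights would come from a fitted topic model.
topic_doc_weights = {
    "topic_a": [0.9, 0.8, 0.1, 0.0, 0.1, 0.0],
    "topic_b": [0.8, 0.9, 0.0, 0.1, 0.0, 0.1],
    "topic_c": [0.0, 0.1, 0.9, 0.8, 0.0, 0.1],
    "topic_d": [0.1, 0.0, 0.8, 0.9, 0.1, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two equal-length weight vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_topic_network(weights, threshold=0.5):
    """Return edges between topics whose similarity meets the threshold.

    Each topic is a node; an edge means two topics co-occur in documents.
    The threshold value is an assumption chosen for this toy example.
    """
    edges = {}
    for a, b in combinations(sorted(weights), 2):
        similarity = cosine(weights[a], weights[b])
        if similarity >= threshold:
            edges[(a, b)] = similarity
    return edges


def connected_components(nodes, edges):
    """Group nodes into clusters of topics that are linked to each other."""
    adjacency = {node: set() for node in nodes}
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)

    seen = set()
    clusters = []
    for node in sorted(nodes):
        if node in seen:
            continue
        stack, cluster = [node], set()
        while stack:
            current = stack.pop()
            if current in cluster:
                continue
            cluster.add(current)
            stack.extend(adjacency[current] - cluster)
        seen |= cluster
        clusters.append(sorted(cluster))
    return clusters


edges = build_topic_network(topic_doc_weights)
clusters = connected_components(topic_doc_weights, edges)
print(clusters)
```

With this toy data, topics that share documents end up in the same cluster, which is the kind of grouping that could then be read as a persona or theme.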

“I have reason to strongly believe, though we don’t have the data, that Russia and other countries who try to interfere in our political discourse will use coronavirus to spread misinformation and rumors to solidify the relationships they’re building with new troll accounts that replace the ones removed by Twitter,” says Ophir.

“The virus is not political, but when any health topic becomes a political matter at the expense of fact, the result is to base conclusions and make decisions, such as whether to social distance or not, on party loyalty, not science.”
