Designing better contact-tracing apps for the next pandemic

As COVID-19 began to spread last spring, apps were developed to track cellphone signals and other data so people who had been near those who were infected could be notified and asked to quarantine. The novel coronavirus rapidly outpaced such efforts, becoming so widespread that tracing individual exposures could not contain it.

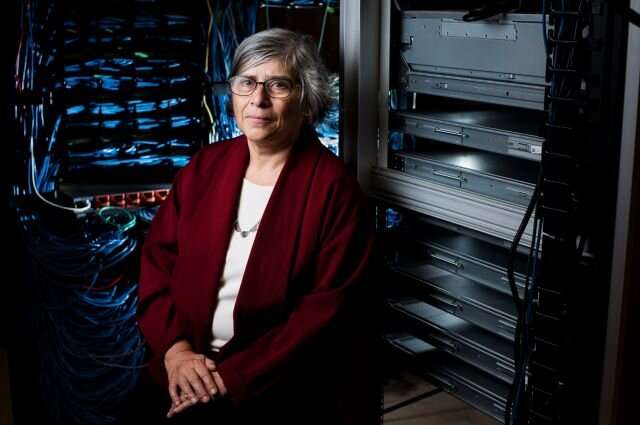

But the issues raised by digital contact tracing—about privacy, effectiveness, and equity—still need to be addressed, says Tufts cybersecurity expert Susan Landau. That’s because a future public health crisis is likely to inspire calls to collect such information once again.

“We now have this infrastructure, and there will be another pandemic,” says Landau, Bridge Professor in Cyber Security and Policy at The Fletcher School and the Tufts School of Engineering. “When another highly infectious respiratory disease starts to spread, we need to know how to design the apps so that their use is efficacious, enhances medical equity, and is, of course, privacy protective.”

Landau discusses the challenges of digital contact tracing in her new book, People Count: Contact-Tracing Apps and Public Health.

Tufts Now spoke with her to understand how technology could be deployed better to protect public health during a pandemic, while still ensuring privacy. Here are five takeaways from the conversation.

The limitations of current technology should be acknowledged

Smart as they are, smartphones and their signals still can’t give us the information we might want. For example, the short-distance Bluetooth signals used in some COVID-19 tracking apps can be affected by the environment in which the app operates.

When one such app was tested in a simulation of a subway car, with people sitting close to each other inside a large gymnasium, the Bluetooth radio signals traveled as expected and could be used to identify people near each other.

But when researchers in Dublin tested the apps on actual trams, they discovered that the signals bounced off the metal connectors between the cars, making them seem stronger and providing unreliable information about how close people really were, Landau says.

Even worse, such digital methods to measure proximity don’t capture other important information, such as whether there was a wall between two people, whether they were indoors or out, or whether they were sitting quietly wearing masks or singing and shouting without masks, Landau says. Such imprecision limits their efficacy.
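A rough illustration of why reflections matter: the sketch below uses the textbook log-distance path-loss model to turn a received signal strength (RSSI) into a distance estimate. It is not the method of any particular app. The function name, the reference power of -59 dBm at one meter, and the path-loss exponent of 2.0 are all assumptions chosen for illustration.

```python
def estimate_distance_m(rssi_dbm, power_at_1m_dbm=-59.0, path_loss_exponent=2.0):
    """Estimate distance in meters from signal strength.

    Log-distance path-loss model (illustrative assumption):
        rssi = power_at_1m - 10 * n * log10(distance)
    solved here for distance.
    """
    return 10 ** ((power_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


# Hypothetical readings for the same pair of phones.
open_space_rssi = -65.0               # assumed reading in an open room
reflected_rssi = open_space_rssi + 6  # assumed 6 dB boost from metal reflections

open_space_estimate = estimate_distance_m(open_space_rssi)
reflected_estimate = estimate_distance_m(reflected_rssi)

print(f"open space: about {open_space_estimate:.1f} m")
print(f"with reflections: about {reflected_estimate:.1f} m")
```

Under these assumed numbers, a stronger reading makes the same two people look about twice as close as they really are, which is the kind of distortion the tram tests exposed.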

Also, if there isn’t widespread random testing of the population, no form of contact tracing will identify exposure to asymptomatic carriers who don’t realize they are infected. In the case of COVID-19, people without symptoms have been responsible for a great deal of transmission.

Storing and sharing location data, and centralized information-gathering, should be avoided

In South Korea last year, a public database initially shared the gender, age, and locations of people diagnosed with COVID, which made it very easy to identify them, Landau says. Location tracking can reveal a lot of private information, such as whether you go to a church at the time that AA meetings are held or spend weekends in a neighborhood known for its LGBTQ nightlife.

Centralizing data-gathering with a government agency can also be problematic. In some countries during the current pandemic, information collected in the name of protecting public health has been shared with other agencies, such as police, Landau says. She argues that this should not be done, in part because it will reduce people’s willingness to use the apps.

Apple, Google, and a group of cryptographers in Europe and the U.S. worked with epidemiologists to create systems that protect privacy and are decentralized. The Google/Apple Exposure Notification system, for example, does not collect location information and will not share identifying information with centralized authorities.

Instead, it tracks proximity and notifies users if they were close to someone with COVID, without identifying the infected person or the location of the exposure. Landau prefers these approaches, which are the only types of contact-tracing apps allowed in the European Union.
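The decentralized idea can be sketched in a few lines. This is a simplified illustration, not the actual Google/Apple protocol: here each phone simply broadcasts plain random tokens, and the `Phone` class, `meet` helper, and the three users are hypothetical. The point is that matching happens on each phone, using identifiers that carry no name or location.

```python
import secrets


class Phone:
    """Hypothetical phone that exchanges random identifiers with nearby phones."""

    def __init__(self):
        self.sent_ids = []      # random IDs this phone has broadcast
        self.heard_ids = set()  # IDs overheard nearby, stored only on this device

    def broadcast(self):
        # A fresh random token: reveals nothing about the owner or the place.
        token = secrets.token_bytes(16)
        self.sent_ids.append(token)
        return token

    def hear(self, token):
        self.heard_ids.add(token)

    def check_exposure(self, published_ids):
        # Matching runs locally; no contact list ever leaves the phone.
        return not self.heard_ids.isdisjoint(published_ids)


def meet(a, b):
    """Simulate two phones coming within Bluetooth range of each other."""
    b.hear(a.broadcast())
    a.hear(b.broadcast())


alice, bob, carol = Phone(), Phone(), Phone()
meet(alice, bob)  # Carol never meets Alice

# Alice tests positive and chooses to publish only the IDs she broadcast.
published = set(alice.sent_ids)

bob_exposed = bob.check_exposure(published)
carol_exposed = carol.check_exposure(published)
print("Bob notified:", bob_exposed)
print("Carol notified:", carol_exposed)
```

In this sketch the published list contains only random tokens, so anyone reading it learns neither who was infected nor where the contact took place; Bob's phone learns only that one of the tokens it overheard is on the list.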

What happens after you learn you’ve been exposed to a disease may be just as important as the notification itself

While apps that provide decentralized exposure notification are great for protecting privacy, they don’t rely on contact tracers and thus lose some of the personal touch and social support that’s central to traditional contact tracing done by people with extensive training, Landau says.

Before even asking where an infected person has been and with whom, those contact tracers ask questions like, “How can I help you? Do you need food delivered? Do you have someone who can care for you?”

That said, decentralized, privacy-protecting apps can be paired with such supports. In Switzerland, for example, if the app says you’re exposed and the health ministry agrees that you should quarantine, “you get financial support to stay at home,” Landau says.

In Ireland, an app allows you the choice to give your phone number. “If you give your phone number, of course you’re not anonymous, but if you are exposed, you get called by the contact tracers,” who can help you figure out next steps, Landau says.

Future apps should consider the benefits of offering such support, rather than leaving people alone with information about potential exposures, she argues.

Adoption of apps should be voluntary, information gathered should be used only to protect public health, and the technology should be transparent

Coercion should have no part in contact tracing, Landau says, and information should never be shared with other government agencies. Governments should invest in evaluation prior to and during deployment of contact-tracing apps in diverse communities to be sure they are serving the whole population. And the public should be able to understand how the apps work.

“You need to be careful not to deploy resources that help the communities that are already doing well and don’t help the other communities,” she says.

Trust is central to making contact tracing work

Undocumented immigrants may be reluctant to share their information because of fears of government prosecution. Black Americans, whose communities have been mistreated by the medical establishment and who have been heavily policed, may also mistrust authorities more than other groups, Landau says. “Uptake of the apps is not going to be the same in these different communities,” she says. “Cultural issues need to be taken into account.”

The first step in building trust is protecting user safety, according to Landau. “False positives don’t protect user safety, especially in poor communities,” she says. “They tell people that they can’t go to work when in fact they’re fine.” To increase trust in an app, notification of a potential exposure should be paired with free, easy-to-access testing, she says.

The computer scientists who developed the privacy-protecting apps “did a really good thing by moving the conversation from centralized apps to decentralized apps,” Landau says. “But what they didn’t do, because they didn’t have enough public health people in the room, is ask: Are we solving the right problem?”

The larger problem, she says, is about equity. That includes not just factors like who owns the latest smartphones but also issues like how hard it is to get an appointment for a COVID-19 test or a vaccine—and, says Landau, how much outreach is done in communities that are hesitant to be vaccinated, to address concerns and help people make informed decisions.
